In the world of cloud computing and DevOps, applications are expected to scale seamlessly, run reliably, and be deployed quickly across multiple environments. While tools like Docker revolutionized how we package and ship applications using containers, managing containers at scale presents a new challenge. Imagine running hundreds or even thousands of containers across multiple servers—how do you manage deployments, scaling, load balancing, and self-healing?

The answer is Kubernetes (K8s).

Kubernetes has become the de facto standard for container orchestration, allowing organizations to run containerized applications at scale in production environments. Backed by Google’s expertise and a massive open-source community, Kubernetes is at the heart of the cloud-native movement and plays a critical role in modern DevOps practices.

In this blog post, we’ll explore Kubernetes in detail—its history, architecture, core components, benefits, real-world use cases, and best practices. By the end, you’ll understand why Kubernetes is one of the most powerful tools in the DevOps ecosystem and how it enables scalable, reliable, and automated application management.

What is Kubernetes?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications.

The name comes from the Greek word kubernētēs (κυβερνήτης), meaning “helmsman” or “pilot,” reflecting its role in steering containers across a cluster of machines. The abbreviation K8s stands for the eight letters between the “K” and the “s.”

Kubernetes ensures that:

- Applications run where they are supposed to.

- Containers are automatically restarted if they fail.

- Traffic is balanced across multiple containers.

- Resources are efficiently allocated across the cluster.

In simple words:

- Docker solves the problem of packaging and running applications in containers.

- Kubernetes solves the problem of running many containers across distributed environments reliably.

A Brief History of Kubernetes

- 2003–2014: Google developed internal systems like Borg and Omega to manage containers at scale.

- 2014: Google open-sourced Kubernetes.

- 2015: Kubernetes v1.0 was released, and Google donated the project to the newly formed Cloud Native Computing Foundation (CNCF).

- 2017 onwards: Kubernetes adoption exploded, supported by AWS, Azure, GCP, and almost every major tech company.

- Today: Kubernetes is the backbone of cloud-native application delivery, powering microservices, CI/CD pipelines, and hybrid cloud platforms.

Why Kubernetes is Important in DevOps

Kubernetes aligns perfectly with DevOps principles of automation, scalability, and resilience. Here’s why it’s essential:

- Automated Deployment and Scaling – Spin up or scale down containers based on demand.

- High Availability – Ensures apps stay online by automatically restarting failed containers.

- Portability – Runs across on-premises, hybrid, and multi-cloud environments.

- Load Balancing – Distributes traffic evenly to avoid overload.

- Infrastructure as Code – Configurations are defined as YAML/JSON, enabling repeatability.

- Microservices Support – Kubernetes is designed to run large-scale microservices applications.

Kubernetes Architecture

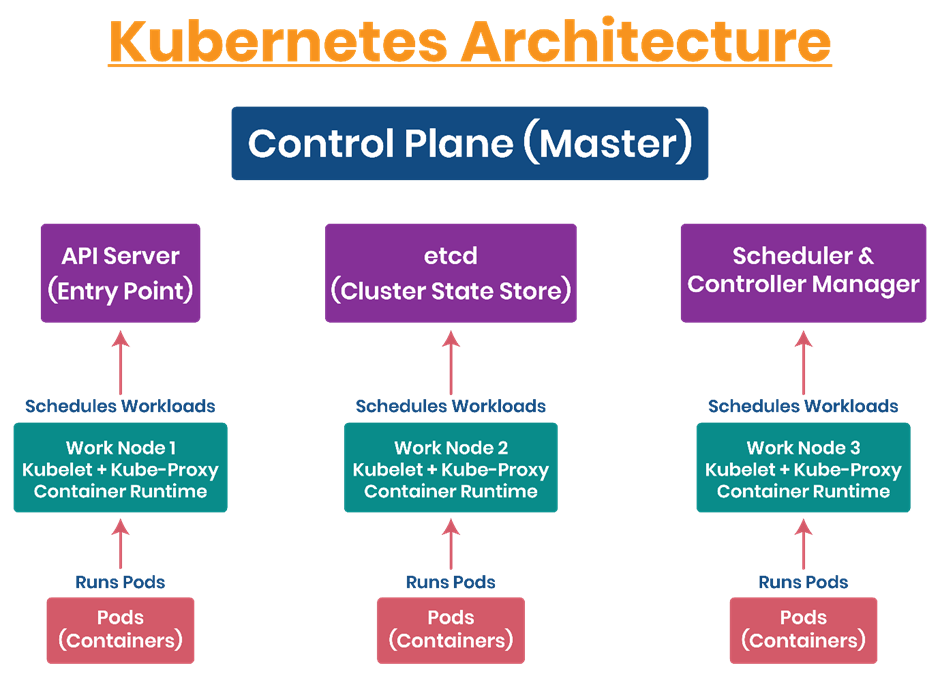

Kubernetes follows a master-worker (control plane–node) architecture.

1. Control Plane (Master Components)

Responsible for managing the cluster:

- API Server: Central management hub for communication.

- etcd: Key-value store for cluster state and configuration.

- Controller Manager: Ensures cluster state matches desired configuration.

- Scheduler: Assigns newly created pods to worker nodes based on their resource requests and scheduling constraints.

2. Worker Nodes

Where applications (containers) run. Components include:

- Kubelet: Agent on each node that talks to the control plane and makes sure the containers described in each pod spec are running and healthy.

- Kube-Proxy: Maintains network rules on each node so that traffic sent to a Service reaches one of its backing pods.

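- Container Runtime: Runs containers (containerd, CRI-O). Docker Engine is still usable, but since Kubernetes v1.24 removed dockershim it needs the cri-dockerd adapter.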

3. Pods

The smallest deployable unit in Kubernetes. A pod may contain one or more containers that share storage and networking.
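To make the idea of shared storage concrete, here is a minimal sketch of a two-container pod. The names (web-with-sidecar, shared-logs, log-tailer) and image tags are illustrative assumptions, not something prescribed by Kubernetes.

```yaml
# Hypothetical pod: an Nginx container plus a sidecar that reads its logs.
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar        # illustrative name
  labels:
    app: web
spec:
  volumes:
    - name: shared-logs         # scratch volume that lives as long as the pod
      emptyDir: {}
  containers:
    - name: web
      image: nginx:1.27         # illustrative tag; pin whatever you actually run
      ports:
        - containerPort: 80
      volumeMounts:
        - name: shared-logs
          mountPath: /var/log/nginx
    - name: log-tailer
      image: busybox:1.36
      # Follows the access log that the web container writes to the shared volume.
      command: ["sh", "-c", "tail -F /var/log/nginx/access.log"]
      volumeMounts:
        - name: shared-logs
          mountPath: /var/log/nginx
```

Both containers also share the pod's network namespace, so the sidecar could reach Nginx at localhost:80.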

4. Services

Abstractions that define how to expose pods to external or internal traffic.
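As a rough sketch, a Service that exposes pods inside the cluster might look like the following. The Service name web and the label app: web are assumptions made for this example; the selector has to match whatever labels your pods actually carry.

```yaml
# Hypothetical internal Service: gives pods labeled app=web a stable
# virtual IP and DNS name inside the cluster.
apiVersion: v1
kind: Service
metadata:
  name: web                # illustrative name; becomes the in-cluster DNS name
spec:
  type: ClusterIP          # internal only; LoadBalancer or NodePort expose externally
  selector:
    app: web               # traffic is routed to pods carrying this label
  ports:
    - name: http
      port: 80             # port the Service listens on
      targetPort: 80       # port the container listens on
```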

5. Deployments

High-level objects that manage replicas of pods, ensuring availability and scalability.

How Kubernetes Works

- A developer defines the desired state of an application in a YAML manifest (e.g., 3 replicas of a web app).

- The API Server stores this configuration in etcd.

- The Scheduler assigns pods to nodes based on resources.

- The Kubelet ensures pods are running on assigned nodes.

- If a pod fails, the Controller Manager creates a replacement automatically.

- Services route user traffic to the correct pods.

This continuous reconciliation ensures that the actual state matches the desired state.

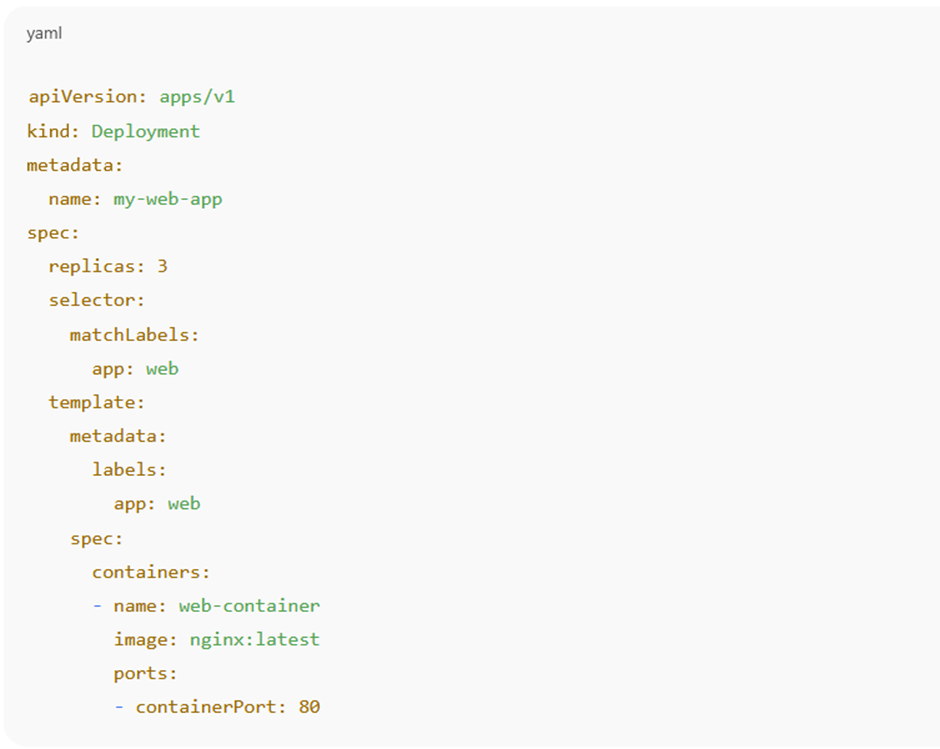

Kubernetes Example: Deployment YAML

Here’s a simple Kubernetes deployment for a web application:
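A minimal sketch of such a manifest is shown below. The name web-app, the label app: web, and the nginx:1.27 image tag are illustrative assumptions; swap in your own values.

```yaml
# Hypothetical Deployment: keeps three Nginx pods running.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app            # illustrative name
  labels:
    app: web
spec:
  replicas: 3              # desired number of pod replicas
  selector:
    matchLabels:
      app: web             # must match the pod template labels below
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: nginx
          image: nginx:1.27    # illustrative tag
          ports:
            - containerPort: 80
```

Saved as deployment.yaml, it could be applied with kubectl apply -f deployment.yaml.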

This deployment ensures that 3 replicas of an Nginx container are always running.

Key Features of Kubernetes

- Self-Healing: Restarts failed containers automatically.

- Horizontal Scaling: Scale applications out or in by adding or removing pod replicas based on CPU or memory usage.

- Service Discovery & Load Balancing: Provides DNS names and load balances requests.

- Automated Rollouts & Rollbacks: Gradually update applications without downtime.

- Storage Orchestration: Attach persistent volumes to containers.

- Secret & Config Management: Keep configuration and sensitive data such as passwords and tokens out of container images by using ConfigMaps and Secrets.

- Multi-Cloud & Hybrid Deployments: Runs anywhere—on AWS, Azure, GCP, or on-prem.
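The horizontal scaling feature above is usually configured with a HorizontalPodAutoscaler. Here is a hedged sketch that assumes a Deployment named web-app already exists, that its containers declare CPU requests, and that a metrics source such as metrics-server is installed. The replica bounds and the 70% target are arbitrary example values.

```yaml
# Hypothetical autoscaler for a Deployment called web-app.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-app
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web-app              # assumed target; must exist in the same namespace
  minReplicas: 3               # never scale below this
  maxReplicas: 10              # never scale above this
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the pods' CPU requests
```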

Benefits of Kubernetes

- Consistency Across Environments – Same deployment process in dev, test, and prod.

- Better Resource Utilization – Efficiently uses cluster resources.

- Resilience & Fault Tolerance – Keeps applications running automatically.

- Vendor Neutral – Runs on any cloud or on-premises system.

- Supports CI/CD – Works with Jenkins, GitLab CI, and ArgoCD for automation.

- Cost Savings – Optimized resource usage reduces infrastructure costs.

Kubernetes in DevOps Pipelines

A typical CI/CD pipeline with Kubernetes looks like this:

- Code Commit → Developer pushes code to Git.

- Build → CI tool builds Docker image.

- Push → Image pushed to container registry.

- Deploy → The pipeline applies the deployment YAML to the cluster (for example, with kubectl apply).

- Scaling & Monitoring → Kubernetes automatically scales and monitors workloads.

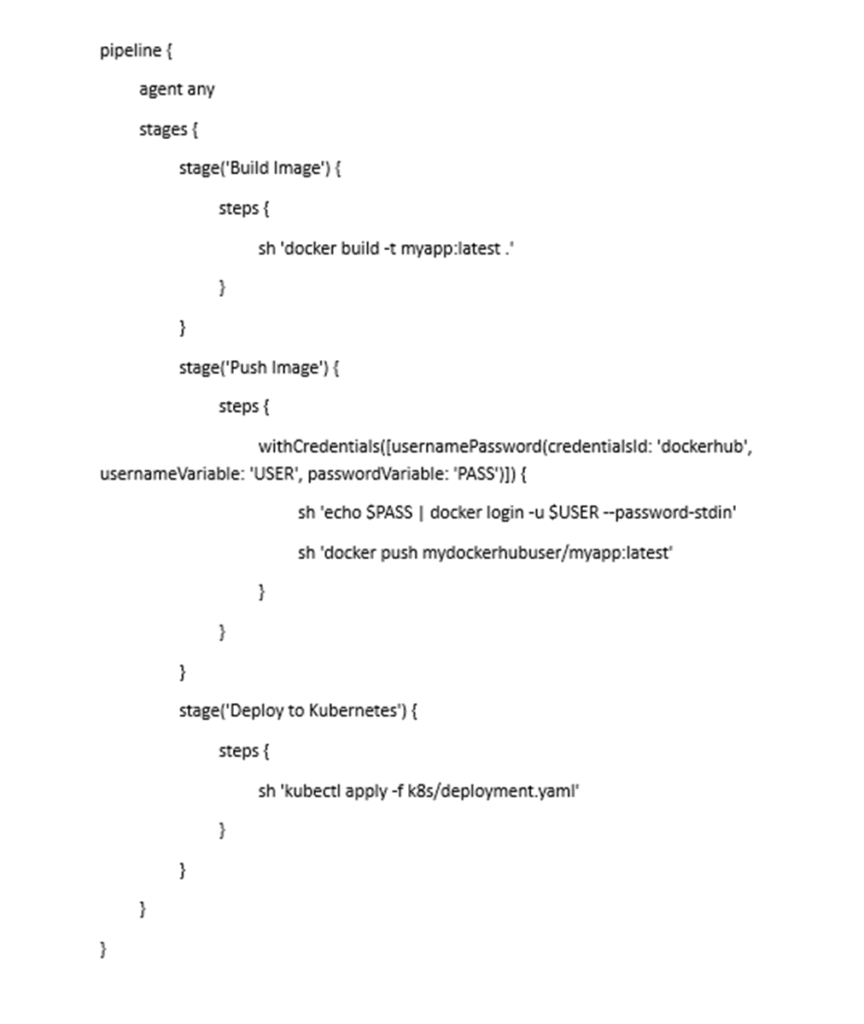

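In Jenkins, these steps typically map to pipeline stages: one builds the Docker image, one pushes it to the registry, and one applies the deployment YAML to the cluster.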

Real-World Use Cases of Kubernetes

- Microservices Applications – Running distributed microservices with independent scaling.

- E-commerce Platforms – Handling traffic spikes during sales events.

- Streaming & Media – Managing workloads for real-time video/audio streaming.

- Finance & Banking – Secure and highly available transaction systems.

- AI/ML Workloads – Scaling machine learning models and data pipelines.

- Gaming – Real-time multiplayer games with millions of users.

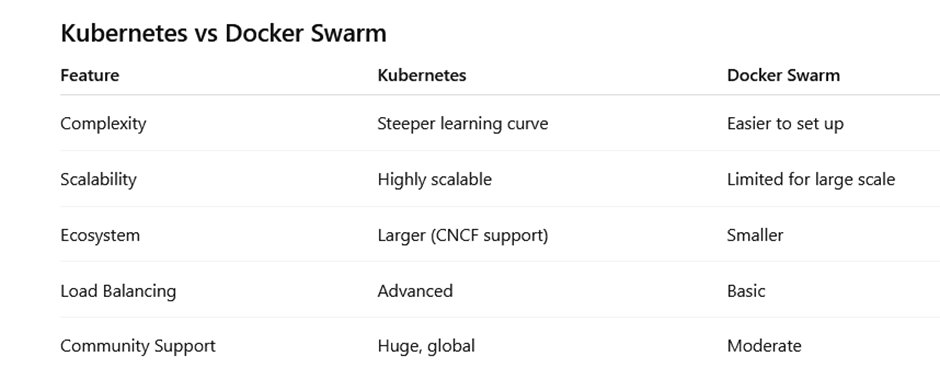

Challenges with Kubernetes

- Steep Learning Curve – YAML manifests, networking, and security can be complex.

- Operational Overhead – Requires monitoring and resource optimization.

- Security Concerns – Misconfigured clusters may expose vulnerabilities.

- Cost Management – Can become expensive if resources are not optimized.

- Tooling Complexity – Integrating Kubernetes with CI/CD, monitoring, and logging requires expertise.

Best Practices with Kubernetes

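- Use Namespaces to separate environments and teams, and pair them with RBAC and resource quotas, since a namespace alone does not isolate workloads.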

- Store configurations in ConfigMaps and sensitive data in Secrets.

- Apply resource limits to containers to prevent resource hogging.

- Automate deployments with Helm charts or Kustomize.

- Enable monitoring with Prometheus + Grafana.

- Use Network Policies for secure communication between pods.

- Regularly update Kubernetes and patch vulnerabilities.
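Two of these practices, resource limits and Network Policies, can be sketched in a few lines of YAML. Everything named here (the staging namespace, the app: web and role: frontend labels, and the specific CPU and memory numbers) is an assumption for illustration. Also note that a NetworkPolicy only takes effect if the cluster's network plugin enforces it.

```yaml
# Hypothetical pod with resource requests and limits.
apiVersion: v1
kind: Pod
metadata:
  name: web
  namespace: staging           # assumed to exist already
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: nginx:1.27        # illustrative tag
      resources:
        requests:              # what the scheduler reserves for the container
          cpu: 100m
          memory: 128Mi
        limits:                # hard ceiling enforced at runtime
          cpu: 500m
          memory: 256Mi
---
# Hypothetical policy: only pods labeled role=frontend may reach
# app=web pods on TCP port 80; other ingress to them is denied.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-web
  namespace: staging
spec:
  podSelector:
    matchLabels:
      app: web
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              role: frontend
      ports:
        - protocol: TCP
          port: 80
```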

The Future of Kubernetes

The Kubernetes ecosystem is evolving rapidly in areas like serverless workloads, GitOps, and AI-driven scaling. Much of this comes from projects built around Kubernetes (Helm, ArgoCD, Istio, Knative), which keep extending what a cluster can do.

With the rise of cloud-native architectures, Kubernetes will continue to be the foundation for application deployment and management in the foreseeable future.

Conclusion

Kubernetes has redefined how we manage applications in the cloud era. By providing automation, scalability, resilience, and consistency, it has become the gold standard for container orchestration.

Whether you’re running a small startup application or managing enterprise-scale microservices, Kubernetes ensures your workloads are deployed reliably and scale seamlessly.

As DevOps practices continue to evolve, Kubernetes remains at the heart of the transformation, empowering organizations to build and deliver software faster, smarter, and more efficiently.

Kubernetes: The Ultimate Guide to Container Orchestration in DevOps – FAQs

What Is Kubernetes and Why Is It Used?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It helps developers manage thousands of containers efficiently across distributed environments.

How Is Kubernetes Different From Docker?

Docker is used to build and run individual containers, while Kubernetes manages and orchestrates multiple containers across clusters, ensuring scalability, reliability, and self-healing.

What Are the Main Components of Kubernetes Architecture?

Kubernetes architecture includes the Control Plane (API Server, etcd, Controller Manager, Scheduler) and Worker Nodes (Kubelet, Kube-Proxy, and Container Runtime), which work together to manage applications.

What Is a Pod in Kubernetes?

A Pod is the smallest deployable unit in Kubernetes. It can contain one or more containers that share networking, storage, and runtime environments.

How Does Kubernetes Ensure High Availability?

Kubernetes automatically restarts failed containers, replicates pods across nodes, and balances network traffic to ensure applications remain available even during failures.

What Is the Role of etcd in Kubernetes?

etcd is a distributed key-value store that maintains the cluster’s configuration, state, and metadata. It acts as the source of truth for Kubernetes.

What Are Deployments in Kubernetes?

Deployments define how applications are deployed and managed. They control replica sets, perform rolling updates, and ensure that the desired number of pods are always running.

What Is the Difference Between a Service and a Pod in Kubernetes?

A Pod runs the actual application containers, whereas a Service exposes those Pods to internal or external network traffic using a stable IP and DNS name.

How Does Kubernetes Support DevOps Practices?

Kubernetes aligns with DevOps through automation, continuous deployment, scalability, and monitoring. It simplifies integration with CI/CD tools like Jenkins, GitLab, and ArgoCD.

What Is Container Orchestration in DevOps?

Container orchestration automates the deployment, management, and scaling of containers across multiple environments — ensuring reliability, load balancing, and resource optimization.

How Does Kubernetes Manage Scaling?

Kubernetes supports horizontal scaling through the Horizontal Pod Autoscaler, which automatically adds or removes pod replicas based on metrics like CPU or memory usage to meet real-time demand.

What Are Some Real-World Use Cases of Kubernetes?

Kubernetes is used in e-commerce, banking, AI/ML, media streaming, and gaming industries to manage scalable, fault-tolerant, and high-performance applications.

What Challenges Do Teams Face With Kubernetes?

Common challenges include steep learning curves, complex YAML configurations, resource optimization, cost control, and integrating monitoring and security tools effectively.

What Are Kubernetes Secrets and ConfigMaps?

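Secrets hold sensitive information (like passwords or tokens) separately from application code, while ConfigMaps hold non-sensitive configuration data used by applications. Secret values are only base64-encoded by default, so enable encryption at rest and restrict access with RBAC.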
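A small sketch of both objects follows. The names web-config and web-credentials, the keys, and the placeholder values are all hypothetical.

```yaml
# Hypothetical ConfigMap for non-sensitive settings.
apiVersion: v1
kind: ConfigMap
metadata:
  name: web-config
data:
  LOG_LEVEL: info
---
# Hypothetical Secret. stringData accepts plain text for convenience;
# the API server stores the values base64-encoded under data.
apiVersion: v1
kind: Secret
metadata:
  name: web-credentials
type: Opaque
stringData:
  DB_PASSWORD: change-me       # placeholder; never commit real secrets to Git
```

A container can then consume both as environment variables through envFrom, or mount them as files.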

What Tools Complement Kubernetes in DevOps Pipelines?

Popular tools include Helm for package management, Prometheus and Grafana for monitoring, Istio for service mesh, and ArgoCD for GitOps-based deployments.

What Is a Typical CI/CD Workflow With Kubernetes?

Code is committed → CI tool builds Docker image → Image pushed to registry → Pipeline applies deployment YAML to the cluster → Application is deployed → Kubernetes monitors and scales automatically.

How Does Kubernetes Enable Microservices Architecture?

Kubernetes isolates and manages each microservice independently, allowing teams to scale, update, and deploy services without affecting the entire system.

What Are Kubernetes Namespaces Used For?

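Namespaces logically divide a cluster into separate environments (like dev, test, and production). They provide a scope for resource names, quotas, and access control, which improves resource management, but they do not isolate workloads on their own.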
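For example, a namespace can be paired with a ResourceQuota to cap what one environment may consume. The namespace name staging and all of the numbers below are assumptions chosen for illustration.

```yaml
# Hypothetical namespace for a staging environment.
apiVersion: v1
kind: Namespace
metadata:
  name: staging
---
# Hypothetical quota: caps the total resources all pods in staging may claim.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: staging-quota
  namespace: staging
spec:
  hard:
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    pods: "20"
```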

How Can Security Be Managed in Kubernetes Clusters?

Best practices include using Role-Based Access Control (RBAC), Network Policies, Secrets, regular updates, and vulnerability scanning of images and configurations.

What Is the Future of Kubernetes?

Kubernetes is expanding into areas like serverless computing, GitOps, AI-driven scaling, and hybrid cloud orchestration, making it central to modern cloud-native infrastructure.