In today’s software development world, speed, scalability, and consistency are essential. Organizations can no longer afford to spend weeks configuring environments or months testing software on different operating systems. Developers want to build, ship, and run applications anywhere without worrying about infrastructure differences. Docker addressed this need by bringing containerization into the mainstream, and in doing so it changed the industry.

Docker has become one of the most influential tools in DevOps, enabling developers and operations teams to build, test, and deploy applications faster and more reliably. By packaging applications and their dependencies into portable, lightweight containers, Docker largely eliminates the infamous “works on my machine” problem. In this blog post, we’ll explore Docker in detail: its history, architecture, key features, advantages, real-world use cases, and how it fits into DevOps workflows. By the end, you’ll understand why Docker is considered the backbone of modern application development and deployment.

What is Docker?

Docker is an open-source platform that allows developers to build, ship, and run applications inside containers. An application is packaged as an image: a lightweight, standalone, executable bundle that includes everything needed to run it (code, runtime, libraries, system tools, and configuration files). A container is a running instance of that image.

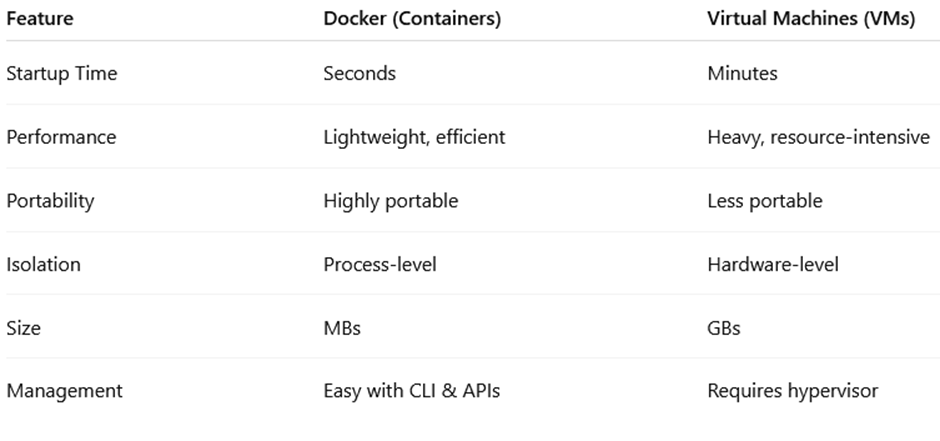

Unlike traditional virtual machines (VMs), each of which runs a full guest operating system on virtualized hardware, Docker containers share the host OS kernel. This makes them faster to start, lighter on memory and disk, and easy to move between machines.

To put it simply:

- Without Docker → Developers struggle with environment setup and compatibility issues.

- With Docker → Developers package the application once and run it anywhere—on laptops, servers, or the cloud—without modification.

Why Docker Matters in DevOps

Docker plays a central role in the DevOps ecosystem by bridging the gap between development and operations. It enables faster builds, testing, and deployments, aligning perfectly with the DevOps principles of automation, consistency, and scalability.

Here’s why Docker is crucial in DevOps:

- Environment Consistency – Applications run the same way across development, testing, and production.

- Faster Deployment – Containers start in seconds, reducing downtime.

- Microservices Support – Docker is the foundation of modern microservices architectures.

- Resource Efficiency – Containers use fewer resources than VMs.

- CI/CD Integration – Works seamlessly with Jenkins, GitLab CI/CD, and Kubernetes.

A Brief History of Docker

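- 2013: Solomon Hykes demonstrated Docker at PyCon. It grew out of the internal tooling of dotCloud, a platform-as-a-service company, and was released as open source that March. Later in 2013, dotCloud renamed itself Docker Inc.

- 2014: Docker 1.0 was released and adoption grew rapidly. Docker Inc. sold its dotCloud PaaS business to focus entirely on containerization.

- 2015: Docker helped launch the Open Container Initiative (OCI) to standardize container image and runtime formats.

- 2017: Docker announced native Kubernetes support alongside its own Swarm orchestrator, and Kubernetes and Docker together came to define the cloud-native ecosystem.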

- Today: Docker remains the most widely used containerization platform, supported by cloud providers like AWS, Azure, and Google Cloud.

Docker Architecture

Docker follows a client-server architecture with several key components:

1. Docker Client

The command-line interface (docker) is used by developers to interact with Docker. Commands like docker build, docker run, and docker pull are sent to the Docker daemon.

2. Docker Daemon (dockerd)

The background service that manages Docker containers, images, networks, and volumes. It listens for Docker Engine API requests (on Linux, by default over the Unix socket /var/run/docker.sock) and carries them out.

3. Docker Images

A Docker image is a blueprint for a container. It contains the application code, libraries, dependencies, and configuration, stored as a stack of read-only layers. Images are immutable, and each build can be tagged with a version.

4. Docker Containers

A running instance of a Docker image. Containers are isolated, portable, and can be started, stopped, or deleted easily.

5. Docker Registry

A centralized place to store and distribute Docker images.

- Docker Hub (public registry)

- Private Registries (e.g., Nexus, Artifactory, Harbor)

6. Docker Engine

The core of Docker that combines the client, daemon, and API to build and run containers.
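The split of responsibilities can be illustrated with a deliberately tiny in-memory Python model. This is a sketch and not how Docker is implemented. Every class and method name in it (`Image`, `Container`, `Daemon`, `Client`, `handle`) is hypothetical. The model shows three points from the components above:

- The client holds no state and only forwards requests.
- The daemon owns all images and containers.
- Several containers can run from the same immutable image.

```python
from dataclasses import dataclass, field
import hashlib
import itertools


@dataclass(frozen=True)
class Image:
    """Read-only blueprint; frozen=True mimics image immutability."""
    name: str
    tag: str
    files: tuple  # hypothetical stand-in for layers: (path, content) pairs

    @property
    def image_id(self) -> str:
        # Derive the ID from the contents only, so identical builds share an ID.
        digest = hashlib.sha256(repr(self.files).encode()).hexdigest()
        return "sha256:" + digest[:12]


@dataclass
class Container:
    """A running instance that points back at the image it was created from."""
    container_id: str
    image: Image
    status: str = "running"


@dataclass
class Daemon:
    """Plays the role of dockerd: it owns every image and container."""
    images: dict = field(default_factory=dict)
    containers: dict = field(default_factory=dict)
    _ids: itertools.count = field(default_factory=itertools.count)

    def handle(self, command: str, **args):
        # Dispatch an incoming "API request" to the matching handler method.
        return getattr(self, "_" + command)(**args)

    def _build(self, name, tag, files):
        image = Image(name, tag, tuple(files))
        self.images[f"{name}:{tag}"] = image
        return image.image_id

    def _run(self, ref):
        cid = f"c{next(self._ids)}"
        self.containers[cid] = Container(cid, self.images[ref])
        return cid

    def _stop(self, cid):
        self.containers[cid].status = "exited"
        return cid


class Client:
    """Plays the role of the docker CLI: no state, it only forwards requests."""

    def __init__(self, daemon: Daemon):
        self.daemon = daemon

    def build(self, name, tag, files):
        return self.daemon.handle("build", name=name, tag=tag, files=files)

    def run(self, ref):
        return self.daemon.handle("run", ref=ref)

    def stop(self, cid):
        return self.daemon.handle("stop", cid=cid)


if __name__ == "__main__":
    daemon = Daemon()
    cli = Client(daemon)
    print(cli.build("myapp", "1.0", [("app.jar", "compiled code")]))
    first, second = cli.run("myapp:1.0"), cli.run("myapp:1.0")
    cli.stop(first)
    for c in daemon.containers.values():
        print(c.container_id, c.image.name, c.status)
```

The ID depends only on the image contents, so building the same files under another tag yields the same ID. This loosely mirrors how a Docker tag is just a name pointing at an image.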

How Docker Works

- A developer writes a Dockerfile (a set of instructions for building an image).

- Docker uses the Dockerfile to create an image.

- The image is pushed to a registry (like Docker Hub).

- When needed, the image is pulled from the registry and run as a container.

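- Containers run on the host OS but remain isolated from one another. This relies on Linux kernel features: namespaces control what a process can see, and cgroups limit how much CPU and memory it can use.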
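The build step in the list above relies on caching. Each Dockerfile instruction becomes a build step, and Docker can reuse a cached step when that instruction and every step before it are unchanged. The Python below imitates that rule by chaining a SHA-256 key from step to step. It is a simplified model under stated assumptions:

- The function names `layer_keys` and `reusable_from_cache` and the dictionary-based build context are hypothetical.
- Only the plain `COPY src dest` form is handled.
- Real BuildKit cache keys account for more inputs than this.

```python
import hashlib


def layer_keys(instructions, context):
    """Return one cache key per instruction (a simplified model, not Docker's code).

    instructions: Dockerfile lines in order, e.g. "COPY app.jar /app/"
    context: hypothetical build context mapping file paths to their contents
    """
    keys = []
    parent = ""  # key of the previous step; empty before the first instruction
    for line in instructions:
        h = hashlib.sha256()
        h.update(parent.encode())  # chaining: an upstream change alters every later key
        h.update(line.encode())
        op, _, args = line.partition(" ")
        if op.upper() in ("COPY", "ADD"):
            # Hash the copied files' contents too (the last argument is the destination).
            for src in args.split()[:-1]:
                h.update(context.get(src, "").encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def reusable_from_cache(old_keys, new_keys):
    """Count the leading steps whose keys are unchanged and can come from the cache."""
    count = 0
    for old, new in zip(old_keys, new_keys):
        if old != new:
            break
        count += 1
    return count


if __name__ == "__main__":
    steps = [
        "FROM eclipse-temurin:17-jre",
        "WORKDIR /app",
        "COPY app.jar /app/",
        'ENTRYPOINT ["java", "-jar", "app.jar"]',
    ]
    before = layer_keys(steps, {"app.jar": "build 1"})
    after = layer_keys(steps, {"app.jar": "build 2"})  # only the JAR changed
    print(reusable_from_cache(before, after), "of", len(steps), "steps reused")
```

Running it prints `2 of 4 steps reused`: changing the JAR invalidates the `COPY` step and everything after it. This is why Dockerfiles usually install dependencies before copying frequently changing application files.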

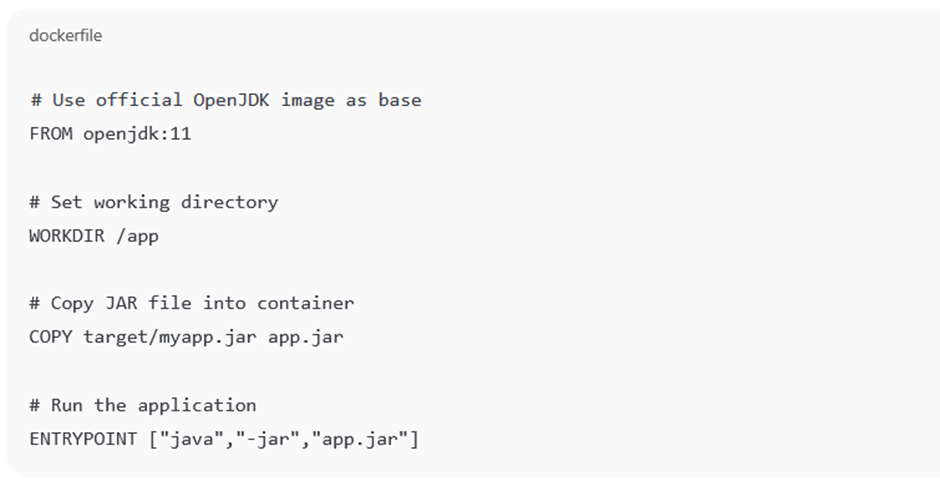

Dockerfile Example

Here’s a simple Dockerfile for a Java application:
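Here is a hypothetical example. The `eclipse-temurin:17-jre` base image, the `target/app.jar` path, and port 8080 are assumptions. The Dockerfile is kept in a Python string, together with a small parser that splits it into the instruction/argument pairs a build processes in order. The parser is a simplified sketch: it skips comments and blank lines and joins backslash continuations, but ignores parser directives, heredocs, and custom escape characters.

```python
# Hypothetical Dockerfile for a Java application (image, paths, and port are assumptions).
DOCKERFILE = """\
# Start from a Java 17 runtime image
FROM eclipse-temurin:17-jre
WORKDIR /app
# Copy the packaged application into the image
COPY target/app.jar app.jar
EXPOSE 8080
ENTRYPOINT ["java", "-jar", "app.jar"]
"""


def parse_dockerfile(text):
    """Split Dockerfile text into (INSTRUCTION, arguments) pairs, in order.

    Simplified: skips comments and blank lines and joins lines that end
    with a backslash, but ignores parser directives and heredocs.
    """
    steps = []
    pending = ""  # accumulates a multi-line instruction
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.endswith("\\"):
            pending += line[:-1].rstrip() + " "
            continue
        instruction, _, args = (pending + line).partition(" ")
        pending = ""
        steps.append((instruction.upper(), args.strip()))
    return steps


if __name__ == "__main__":
    for instruction, args in parse_dockerfile(DOCKERFILE):
        print(f"{instruction:<11} {args}")
```

To build the image, run `docker build -t myapp:1.0 .` in a directory containing this Dockerfile and the `target/` folder (the `myapp:1.0` tag is also a placeholder). The resulting image starts the JAR with `java -jar app.jar`.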

This Dockerfile packages a Java application into an image that runs the same way on any host with a container runtime and a compatible CPU architecture.

Key Features of Docker

- Lightweight – Containers share the host OS kernel, so they start faster and use less memory and disk than VMs.

- Portable – Run anywhere: local machine, on-premises servers, or cloud.

- Scalable – Easily scale applications horizontally by running multiple containers.

- Version Control – Images can be tagged and versioned.

- Isolation – Each container runs independently with its own environment.

- Networking & Storage – Containers can communicate over Docker-managed networks and keep persistent data in volumes.

- Open Source & Extensible – Supports plugins and integrates with many tools.
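Tags are one part of an image reference, which can also name a registry and a repository. The sketch below mirrors, in simplified form, the defaults Docker fills in when parts are omitted:

- the `docker.io` registry,
- the `library/` namespace for official images,
- the `latest` tag.

The function name is hypothetical, and digest references (`name@sha256:...`) are deliberately not handled.

```python
def normalize_reference(ref):
    """Expand a short image reference into (registry, repository, tag).

    Simplified: digest references (name@sha256:...) are not handled.
    """
    first, slash, rest = ref.partition("/")
    # The first path component is a registry only if it looks like a hostname.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry = first
    else:
        registry, rest = "docker.io", ref
    if registry == "docker.io" and "/" not in rest:
        rest = "library/" + rest  # official images live under library/
    repository, colon, tag = rest.rpartition(":")
    if not colon:
        repository, tag = rest, "latest"  # "latest" is only a default name
    return registry, repository, tag


if __name__ == "__main__":
    for ref in ["nginx", "nginx:1.25", "myorg/api:2.3.1",
                "ghcr.io/owner/tool", "localhost:5000/team/app:dev"]:
        print(f"{ref:<30} -> {normalize_reference(ref)}")
```

`latest` is only the default tag name, not a pointer to the newest build. Explicit version tags therefore keep deployments reproducible.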

Benefits of Docker

- Simplifies DevOps Workflows – Standardizes environments across teams.

- Accelerates Time-to-Market – Speeds up deployment cycles.

- Reduces Costs – Optimizes hardware resource usage.

- Improves Developer Productivity – Faster testing and debugging.

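- Enhances Security – Isolation limits what a compromised application can reach, although containers share the host kernel and are less strongly isolated than VMs.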

- Supports Legacy Applications – Wrap older apps in containers for easier deployment.

Docker vs Virtual Machines

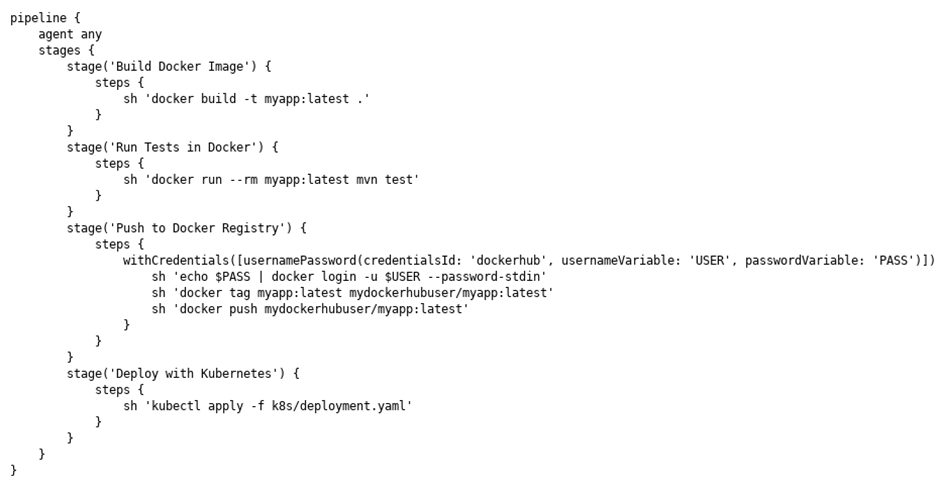

Docker in DevOps Pipelines

Docker integrates seamlessly with CI/CD pipelines:

- Code Commit → Developer pushes code to GitHub/GitLab.

- Build → The CI server (Jenkins, GitLab CI) builds the Docker image.

- Test → Automated tests run inside containers.

- Push → The image is pushed to Docker Hub or a private registry.

- Deploy → Kubernetes or Docker Swarm deploys containers to production.

Example Jenkins pipeline with Docker:
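Jenkins pipelines are normally written in Groovy in a `Jenkinsfile`. As a language-neutral sketch, the Python below models the Build → Test → Push → Deploy stages listed above. It runs as a dry run, only assembling the `docker` and `kubectl` commands each stage would execute. The following are hypothetical placeholders:

- the registry `registry.example.com`,
- the image name `myapp`,
- the `./run-tests.sh` script,
- a Kubernetes Deployment and container both named `myapp`.

```python
import shlex
import subprocess

# Hypothetical placeholders: substitute your own registry, image, and deployment.
REGISTRY = "registry.example.com"
IMAGE = "myapp"


def pipeline_commands(git_sha, test_script="./run-tests.sh"):
    """Return (stage, command) pairs for a Build -> Test -> Push -> Deploy pipeline."""
    ref = f"{REGISTRY}/{IMAGE}:{git_sha[:7]}"  # tag each image with its commit
    return [
        ("Build", ["docker", "build", "-t", ref, "."]),
        # Override the image's ENTRYPOINT so the container runs the (hypothetical) tests.
        ("Test", ["docker", "run", "--rm", "--entrypoint", "sh", ref, "-c", test_script]),
        ("Push", ["docker", "push", ref]),
        # Assumes a Deployment and a container that are both named after IMAGE.
        ("Deploy", ["kubectl", "set", "image", f"deployment/{IMAGE}", f"{IMAGE}={ref}"]),
    ]


def run_pipeline(git_sha, dry_run=True):
    for stage, cmd in pipeline_commands(git_sha):
        print(f"[{stage}] {shlex.join(cmd)}")
        if not dry_run:
            # check=True raises on a non-zero exit, so later stages never run.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_pipeline("3f9c2a1b7d")  # dry run: prints the commands only
```

Calling `run_pipeline(sha, dry_run=False)` would execute the commands on a machine with Docker and kubectl installed. A real pipeline would also authenticate to the registry (for example with `docker login`) before pushing. Tagging with the commit hash ties each image to the exact source that produced it. It also guarantees that the image that passed the Test stage is the same one that gets pushed and deployed.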

Real-World Use Cases of Docker

- Microservices Architecture – Each microservice runs in its own container.

- Testing Environments – QA teams spin up disposable test environments.

- Hybrid Cloud Deployments – Applications run across multiple cloud providers.

- CI/CD Pipelines – Automated builds and deployments.

- Legacy Modernization – Wrapping old apps into containers for easier portability.

- Big Data & AI – Run ML models and data pipelines in isolated containers.

Challenges with Docker

- Security Risks – Poorly configured containers may expose vulnerabilities.

- Networking Complexity – Managing container networks at scale is challenging.

- Data Persistence – Data written inside a container is lost when the container is removed, so anything that must survive needs a volume or bind mount.

- Learning Curve – Requires understanding images, volumes, networks, and orchestration.

- Scaling Beyond Single Host – Requires orchestration tools like Kubernetes or Swarm.
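The data-persistence challenge follows from how a container's filesystem is layered. Read-only image layers sit beneath a thin writable layer that belongs to the container and disappears with it, while a volume lives outside the container. The toy model below illustrates this with Python's `collections.ChainMap`. The class, its methods, and the single `/data` mount point are hypothetical. Real storage drivers such as overlay2 operate on files rather than dictionaries.

```python
from collections import ChainMap


class ToyContainerFS:
    """Toy union filesystem: a writable layer stacked over read-only image layers."""

    def __init__(self, image_layers, volume=None, mount="/data"):
        # image_layers are listed bottom to top, in the order the build created them.
        self.writable = {}  # this container's own layer, lost when it is removed
        self.view = ChainMap(self.writable, *reversed(image_layers))
        self.volume = volume  # external dict that outlives any single container
        self.mount = mount

    def _in_volume(self, path):
        return self.volume is not None and path.startswith(self.mount + "/")

    def read(self, path):
        if self._in_volume(path):
            return self.volume[path]
        # ChainMap searches the writable layer first, then image layers top-down.
        return self.view[path]

    def write(self, path, data):
        if self._in_volume(path):
            self.volume[path] = data
        else:
            # ChainMap writes only to its first map: the change lands in the
            # writable layer and shadows the image's copy, which stays untouched.
            self.view[path] = data


if __name__ == "__main__":
    base = {"/etc/app.conf": "default", "/app/app.jar": "v1"}
    update = {"/app/app.jar": "v2"}  # a later layer overriding a file
    shared_volume = {}

    first = ToyContainerFS([base, update], volume=shared_volume)
    first.write("/etc/app.conf", "edited")
    first.write("/data/orders.db", "rows")

    # Simulate removing the container and starting a new one from the same image.
    second = ToyContainerFS([base, update], volume=shared_volume)
    print(second.read("/app/app.jar"))     # v2
    print(second.read("/etc/app.conf"))    # default: the edit was lost
    print(second.read("/data/orders.db"))  # rows: the volume kept it
```

With the real CLI, a named volume plays the role of the `volume` argument here. You create it with `docker volume create` and attach it with `docker run -v name:/path ...`.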

Best Practices for Docker

- Write minimal Dockerfiles (use lightweight base images like Alpine).

- Use .dockerignore to exclude unnecessary files.

- Regularly scan images for vulnerabilities.

- Tag images properly with versions.

- Store images in private registries for security.

- Use multi-stage builds for optimized images.

- Avoid running containers as root.

- Automate with CI/CD pipelines.
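Several of these practices can be checked mechanically. The function below is a hypothetical mini-linter sketch, not an existing tool; dedicated linters such as Hadolint cover far more rules. Using simplified parsing, it does three things:

- flags base images without a pinned version tag,
- suggests a multi-stage build for single-stage files,
- warns when the final stage never switches to a non-root `USER`.

```python
def lint_dockerfile(text):
    """Return warnings for a few Docker best practices (hypothetical mini-linter)."""
    warnings = []
    stages = 0
    stage_names = set()
    final_user = None  # last USER in the current stage; the final stage's value is kept
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, args = line.partition(" ")
        instruction = instruction.upper()
        if instruction == "FROM":
            stages += 1
            final_user = None  # a new stage starts with its base image's user
            tokens = [t for t in args.split() if not t.startswith("--")]
            if not tokens:
                continue
            image = tokens[0]
            # Skip references to earlier stages and the empty "scratch" image.
            if image.lower() not in stage_names and image != "scratch":
                last = image.rsplit("/", 1)[-1]
                if "@" not in image and (":" not in last or last.endswith(":latest")):
                    warnings.append(f"base image '{image}' is not pinned to a version tag")
            if len(tokens) >= 3 and tokens[1].upper() == "AS":
                stage_names.add(tokens[2].lower())
        elif instruction == "USER":
            final_user = args.split(":")[0].strip()
    if stages == 0:
        warnings.append("no FROM instruction found")
    elif stages == 1:
        warnings.append("single-stage build: consider a multi-stage build "
                        "to keep build tools out of the final image")
    if stages and final_user in (None, "root", "0"):
        warnings.append("final stage sets no non-root USER, so it may run as root")
    return warnings


if __name__ == "__main__":
    sample = 'FROM node:latest\nCOPY . /app\nCMD ["node", "/app/server.js"]\n'
    for warning in lint_dockerfile(sample):
        print("warning:", warning)
```

For the sample, it reports the `:latest` base image, the single stage, and the missing non-root `USER`.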

Docker and Kubernetes

While Docker provides containerization, Kubernetes provides orchestration. Together, they power modern cloud-native applications:

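- Docker builds images and runs containers, especially during development and CI. Since Kubernetes 1.24 removed its built-in Docker Engine integration (dockershim), clusters usually run containers through CRI runtimes such as containerd or CRI-O. Images built with Docker remain compatible and run there unchanged.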

- Kubernetes manages container deployment, scaling, and networking.
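Kubernetes works declaratively. You state the desired image and number of replicas, and controllers repeatedly compare that with what is actually running and act on the difference. The Python below is a hypothetical, heavily simplified sketch of one reconciliation pass, not Kubernetes code. It ignores scheduling, health checks, and gradual rolling updates, and the function names are illustrative.

```python
import uuid


def reconcile(desired_replicas, desired_image, running):
    """Plan one reconciliation pass (a hypothetical, simplified controller).

    running: dict mapping container name -> image reference it runs.
    Returns a list of ("stop", name) and ("start", image) actions.
    """
    actions = []
    # Replace containers on an outdated image (real rollouts do this gradually).
    for name in sorted(running):
        if running[name] != desired_image:
            actions.append(("stop", name))
    current = sorted(n for n, img in running.items() if img == desired_image)
    surplus = len(current) - desired_replicas
    for name in current[:max(surplus, 0)]:
        actions.append(("stop", name))  # too many replicas: scale down
    for _ in range(max(-surplus, 0)):
        actions.append(("start", desired_image))  # too few replicas: scale up
    return actions


def apply_actions(actions, running):
    """Simulate carrying out a plan; new containers get random placeholder names."""
    state = dict(running)
    for kind, arg in actions:
        if kind == "stop":
            del state[arg]
        else:
            state[f"replica-{uuid.uuid4().hex[:8]}"] = arg
    return state


if __name__ == "__main__":
    running = {"web-a": "myapp:1.0", "web-b": "myapp:1.1"}
    plan = reconcile(3, "myapp:1.1", running)
    print(plan)
    running = apply_actions(plan, running)
    print(reconcile(3, "myapp:1.1", running))  # converged: prints []
```

Running the same pass again on the converged state produces no actions. That property lets such a loop run continuously and repair drift, for example by restarting replicas after a crash.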

The Future of Docker

The containerization ecosystem continues to evolve. While Docker remains the standard for building and running containers, new tools like Podman and CRI-O are gaining traction. Still, Docker’s simplicity, community support, and integration make it the go-to choice for developers. With cloud-native technologies and microservices dominating, Docker will continue to play a vital role in shaping the future of software delivery.

Conclusion

Docker transformed software development by making applications portable, consistent, and efficient. It is not just a tool—it is a cultural shift in how teams build and deploy software.

From startups to Fortune 500 companies, Docker is at the heart of DevOps, enabling faster innovation, reduced costs, and improved collaboration. Whether you’re building microservices, deploying AI workloads, or modernizing legacy systems, Docker empowers you to do it all with ease. In today’s competitive landscape, where speed and agility define success, Docker stands tall as the foundation of containerization and modern DevOps practices.

Docker: The Power of Containerization in DevOps – FAQs

What Is Docker, and Why Is It Important in DevOps?

Docker is an open-source platform that allows applications to run inside containers. It is important in DevOps because it ensures consistency, speeds up deployment, and simplifies workflows across development, testing, and production.

How Is a Docker Container Different From a Virtual Machine (VM)?

Unlike VMs, each of which runs a full guest operating system on virtualized hardware, Docker containers share the host OS kernel. This makes containers lightweight, faster to start, and more resource-efficient.

What Problem Does Docker Solve for Developers?

Docker eliminates the “works on my machine” problem by packaging code, dependencies, and environment settings into a portable container that runs anywhere.

What Are the Main Components of Docker Architecture?

Docker architecture includes the Docker Client, Docker Daemon, Docker Images, Docker Containers, Docker Registry (e.g., Docker Hub), and Docker Engine.

What Is a Docker Image, and How Is It Different From a Container?

A Docker image is a read-only blueprint of an application with dependencies. A container is a running instance of that image, isolated but lightweight.

What Role Does Docker Play in CI/CD Pipelines?

Docker integrates into CI/CD workflows by providing consistent build, test, and deployment environments. It works seamlessly with tools like Jenkins, GitLab CI, and Kubernetes.

Why Is Docker Considered Essential for Microservices?

Docker allows each microservice to run independently in its own container, simplifying scaling, updating, and deploying services without impacting others.

What Are Some Real-World Use Cases of Docker?

Common use cases include microservices, disposable testing environments, hybrid cloud deployments, legacy app modernization, CI/CD automation, and AI/ML workloads.

How Does Docker Improve Environment Consistency?

Docker packages an application and its dependencies into a single image that moves unchanged through development, QA, and production. This keeps those environments consistent and avoids configuration drift.

What Are the Advantages of Docker Over Traditional Deployment Methods?

Advantages include faster deployments, lower resource usage, scalability, portability, and easier integration with DevOps tools.

What Is a Dockerfile, and Why Is It Important?

A Dockerfile is a text file containing instructions to build a Docker image. It defines the application environment in a repeatable and automated way.

How Secure Is Docker for Enterprise Use?

Docker enhances security through container isolation but requires best practices like regular image scanning, using private registries, and avoiding root users.

What Are the Limitations or Challenges of Docker?

Challenges include managing container networks, ensuring persistent data storage, scaling beyond a single host, and handling security vulnerabilities.

How Does Docker Integrate With Kubernetes?

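Docker is commonly used to build and package container images, while Kubernetes orchestrates deployment, scaling, load balancing, and networking across clusters of containers. Since Kubernetes 1.24, clusters usually run those images through CRI runtimes such as containerd or CRI-O rather than through Docker Engine itself.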

What Are Docker Registries, and Why Are They Needed?

Registries store and distribute Docker images. Public options include Docker Hub, while enterprises often use private registries for security and control.

How Does Docker Support Legacy Applications?

Docker can wrap older applications into containers, making them easier to deploy on modern infrastructure without major rewrites.

What Are Some Best Practices for Using Docker Effectively?

Best practices include using lightweight base images, scanning for vulnerabilities, tagging images, securing registries, using .dockerignore, and adopting multi-stage builds.

What Industries Benefit Most From Docker Adoption?

Industries such as IT services, e-commerce, fintech, healthcare, and AI research benefit by reducing deployment times and enabling scalable cloud-native apps.

What Is the Future of Docker in the Container Ecosystem?

Docker will continue to dominate as the standard for containerization, though it coexists with alternatives like Podman and orchestration systems like Kubernetes.

How Has Docker Impacted Modern DevOps Culture?

Docker shifted DevOps towards automation, faster feedback loops, cross-team collaboration, and cloud-native practices, making it the backbone of modern software delivery.